Lecture

7/11/25

Working with the Machine

Reflections from my lecture on vibe coding for designers

The work at the heart of this lecture began, like nearly all of my self-initiated projects, with a question: “I wonder…?” “I wonder what these two shapes might look like together?” “I wonder if I could do that?”

I’ve always been interested in the role chance plays in art. Ever since picking up *Dada: Zurich, Berlin, Hannover, Cologne, New York, Paris*, the great retrospective on Dadaism, and learning about Hans Arp’s famous collage experiments, in which he let chance shape his compositions, I’ve looked for ways to bring a similar unpredictability into my own work. Whether I’m working in analog or digital, I’m drawn to the unexpected, to structured randomness, and to processes that open up the Poetic Gap: the space where meaning is co-created between artist and viewer.

My first experiments with vibe coding grew out of that ongoing curiosity. I’m not a developer, though I’ve worked alongside developers for much of my career, and I’ve taken a few coding classes that gave me a general sense of how basic HTML and CSS work. Recently, I’ve begun studying creative coding with Processing. AI has helped me work through ideas faster and understand what more complex code does; it fills gaps in skills I don’t fully have yet. And while I appreciate that AI can “just make it work,” I’m most satisfied when I actually understand what the code is doing. I’m a big believer in lifelong learning, so I don’t want the tool to simply do the work for me. I want to understand it: when to use it, how to use it, and what it’s actually doing.

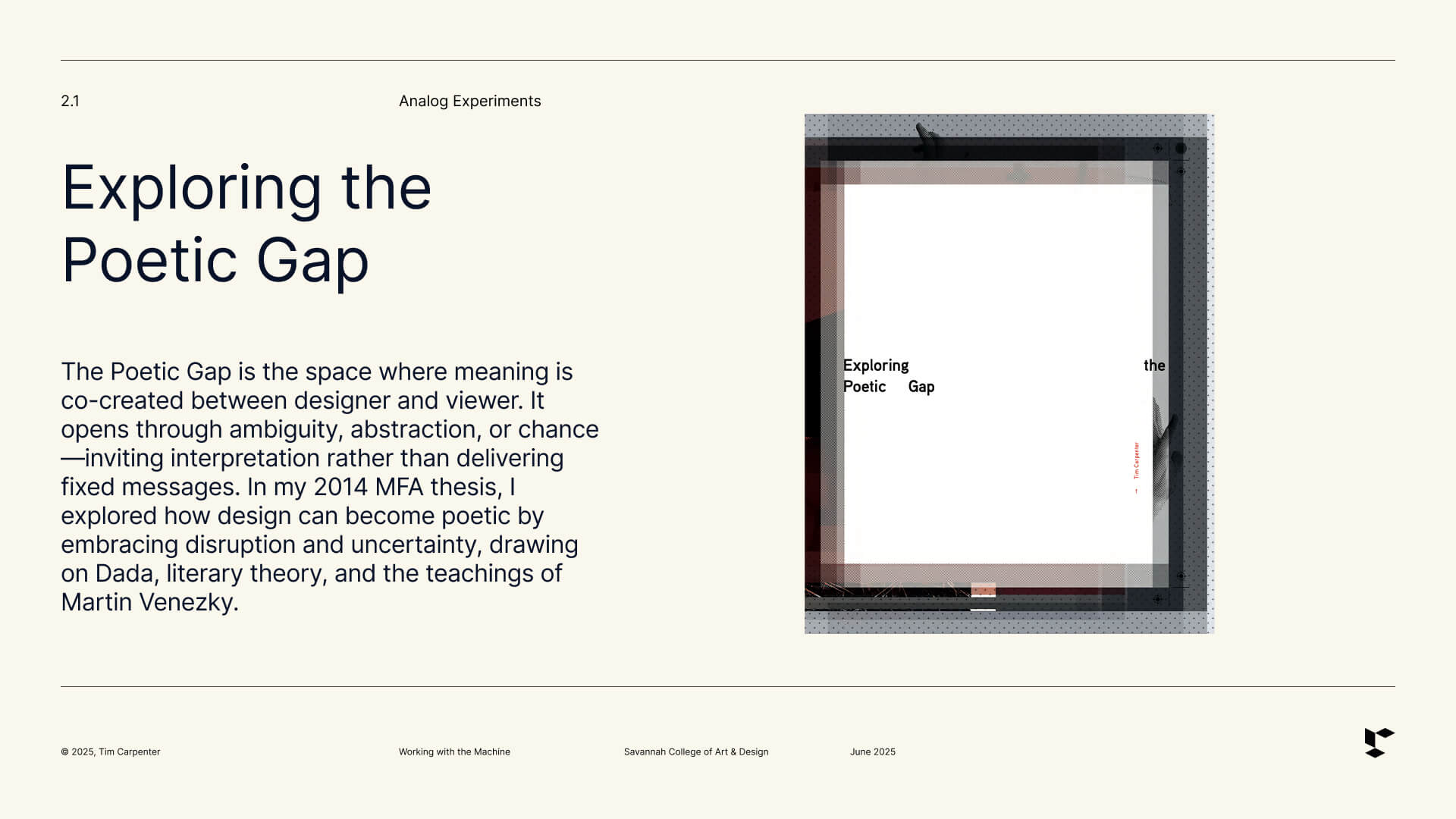

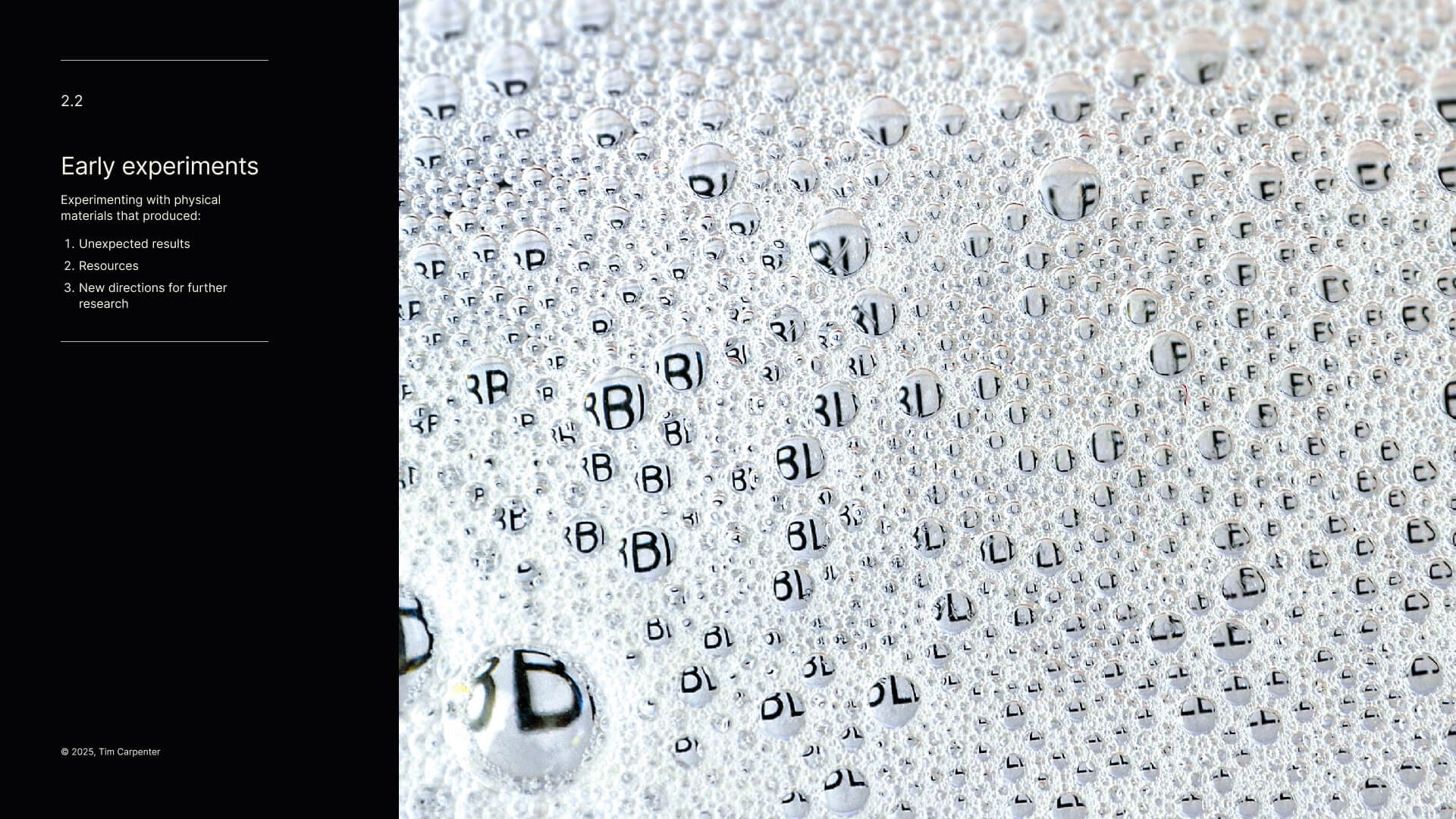

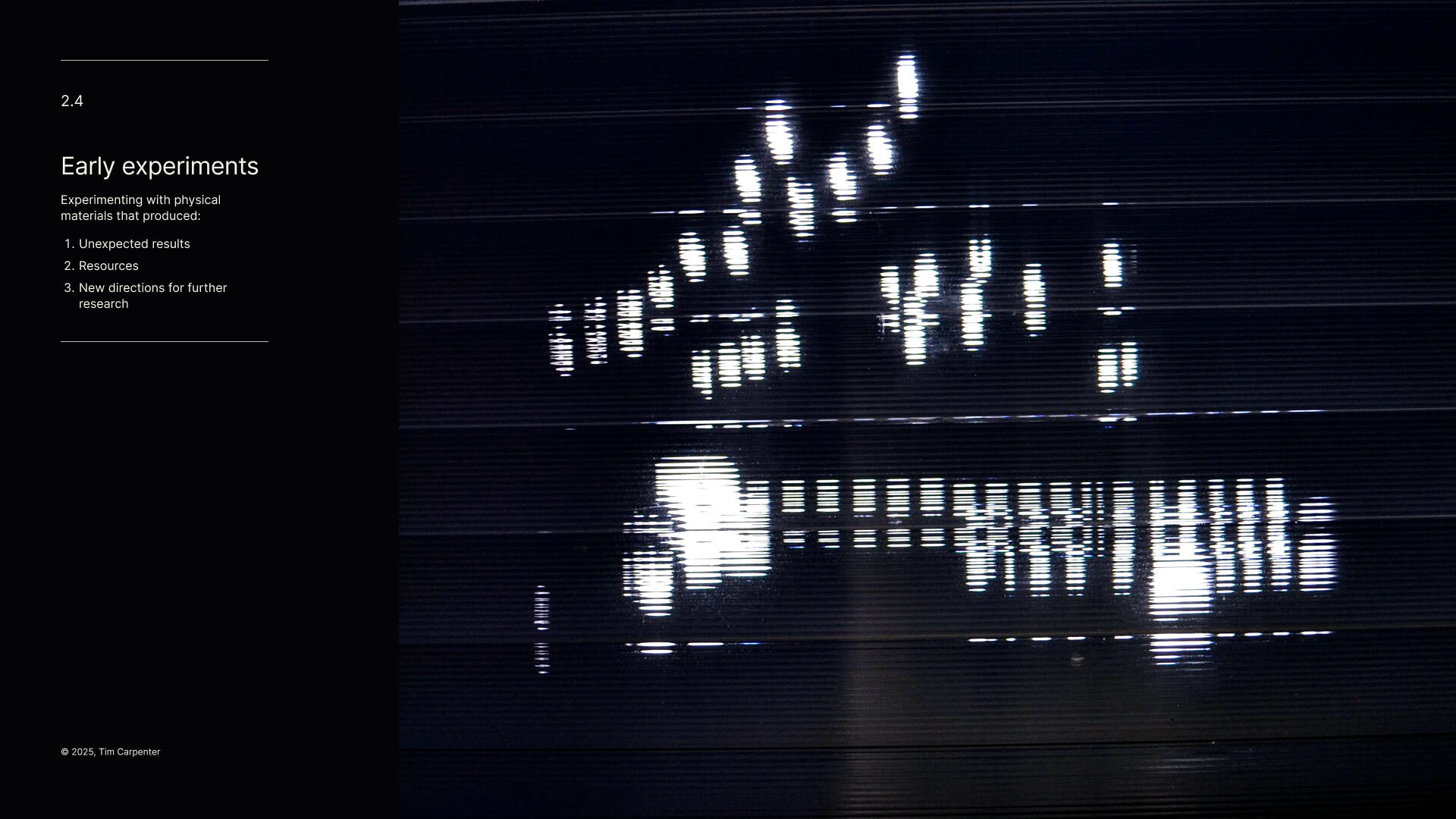

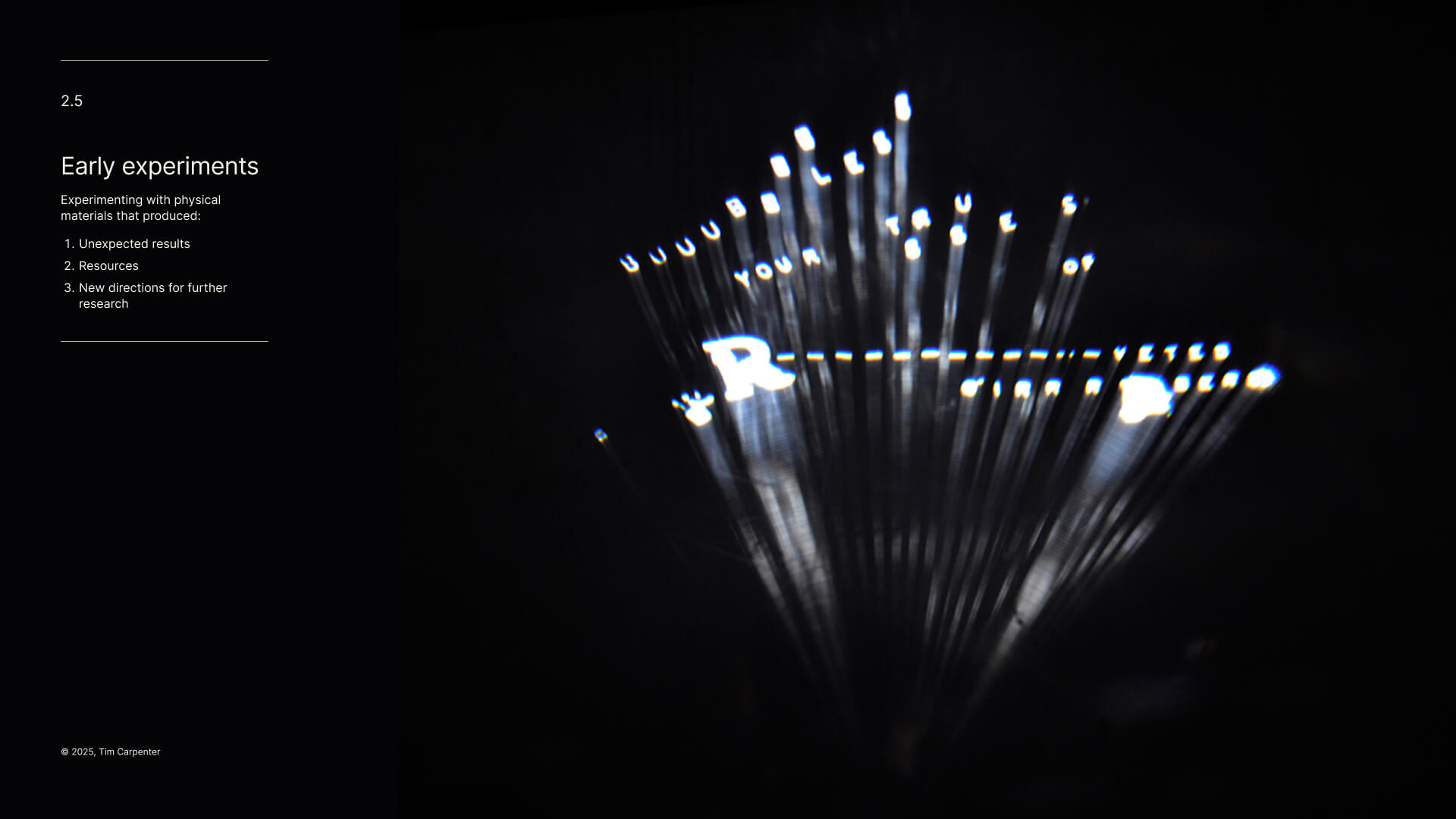

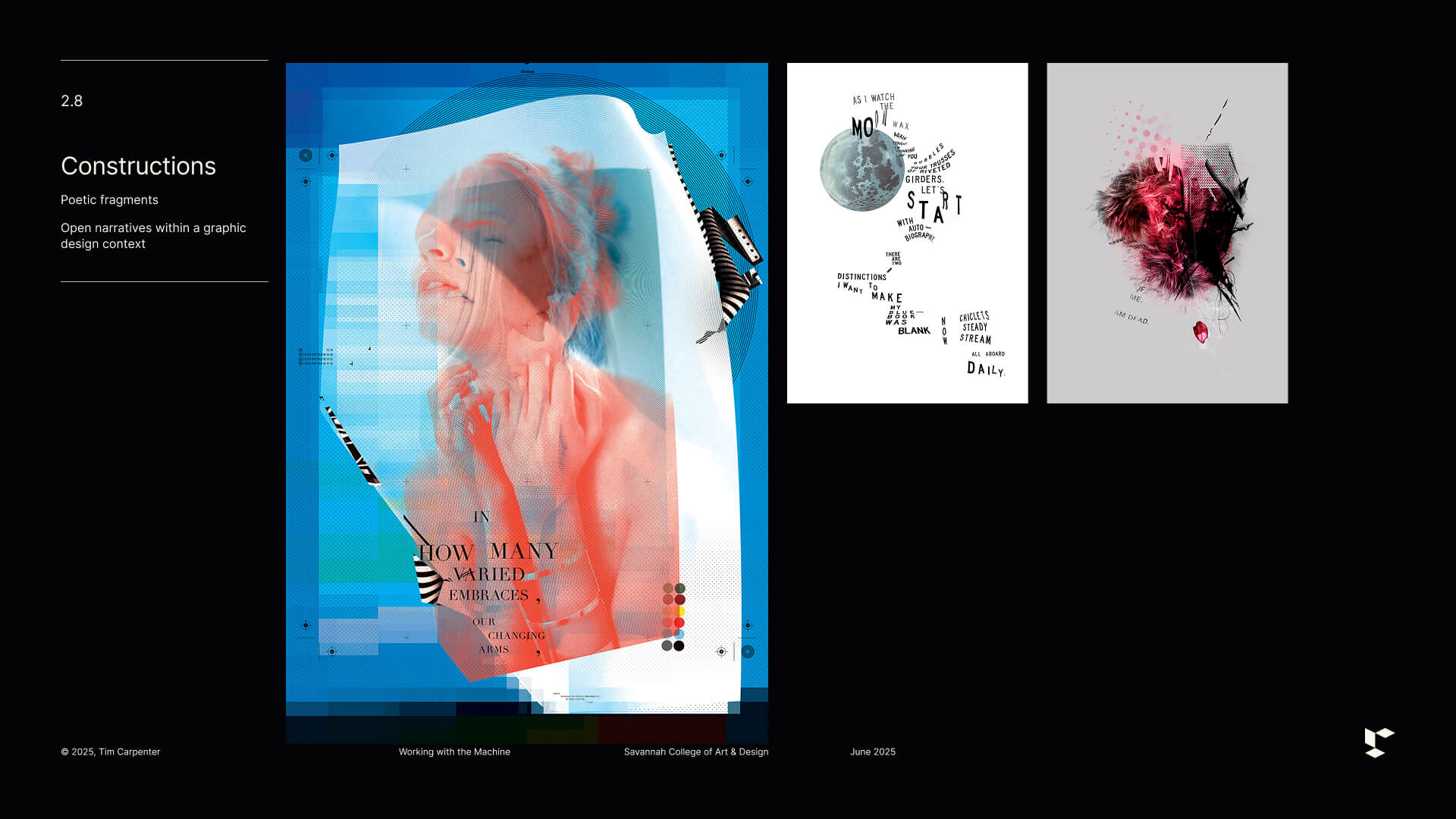

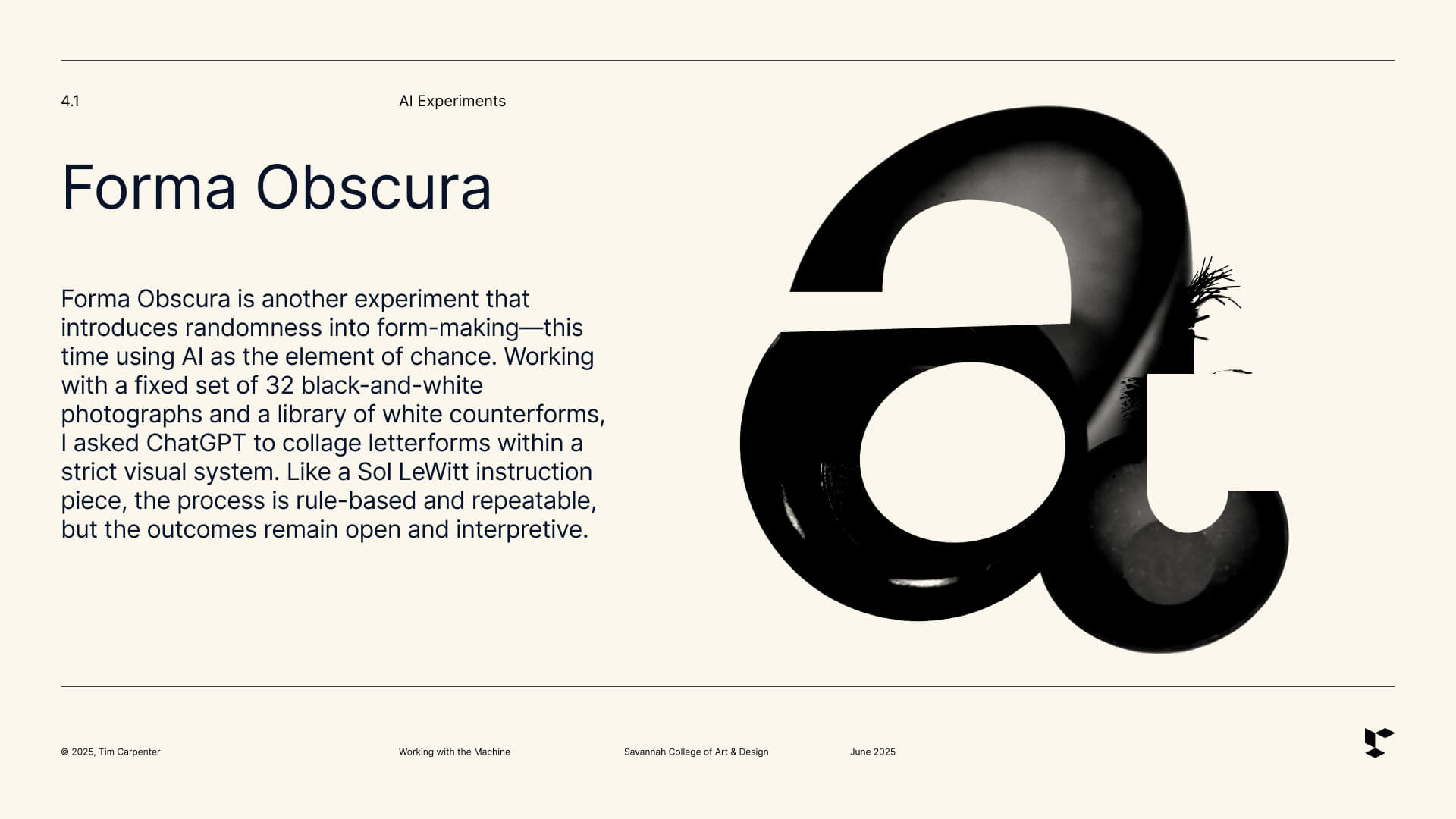

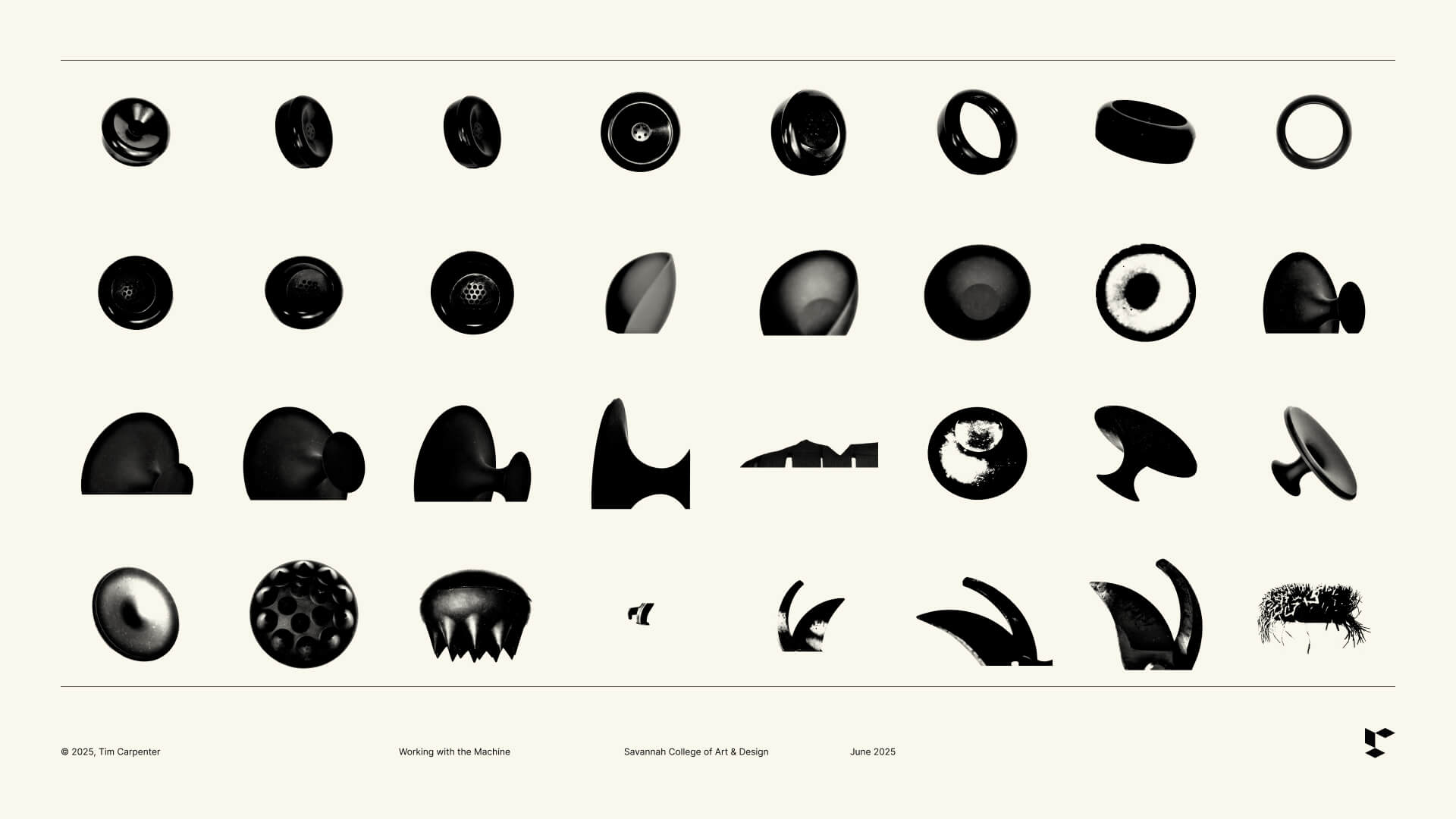

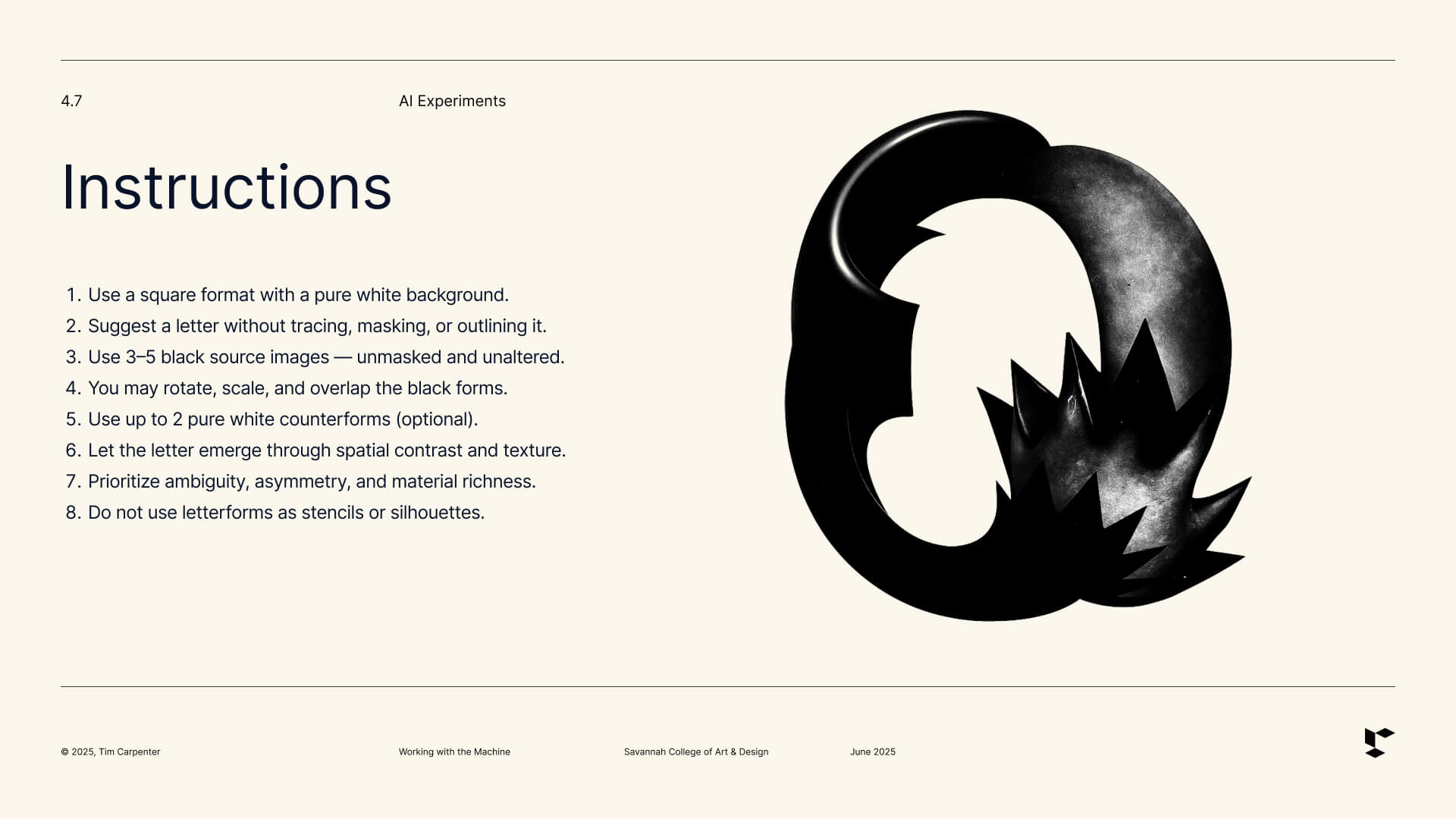

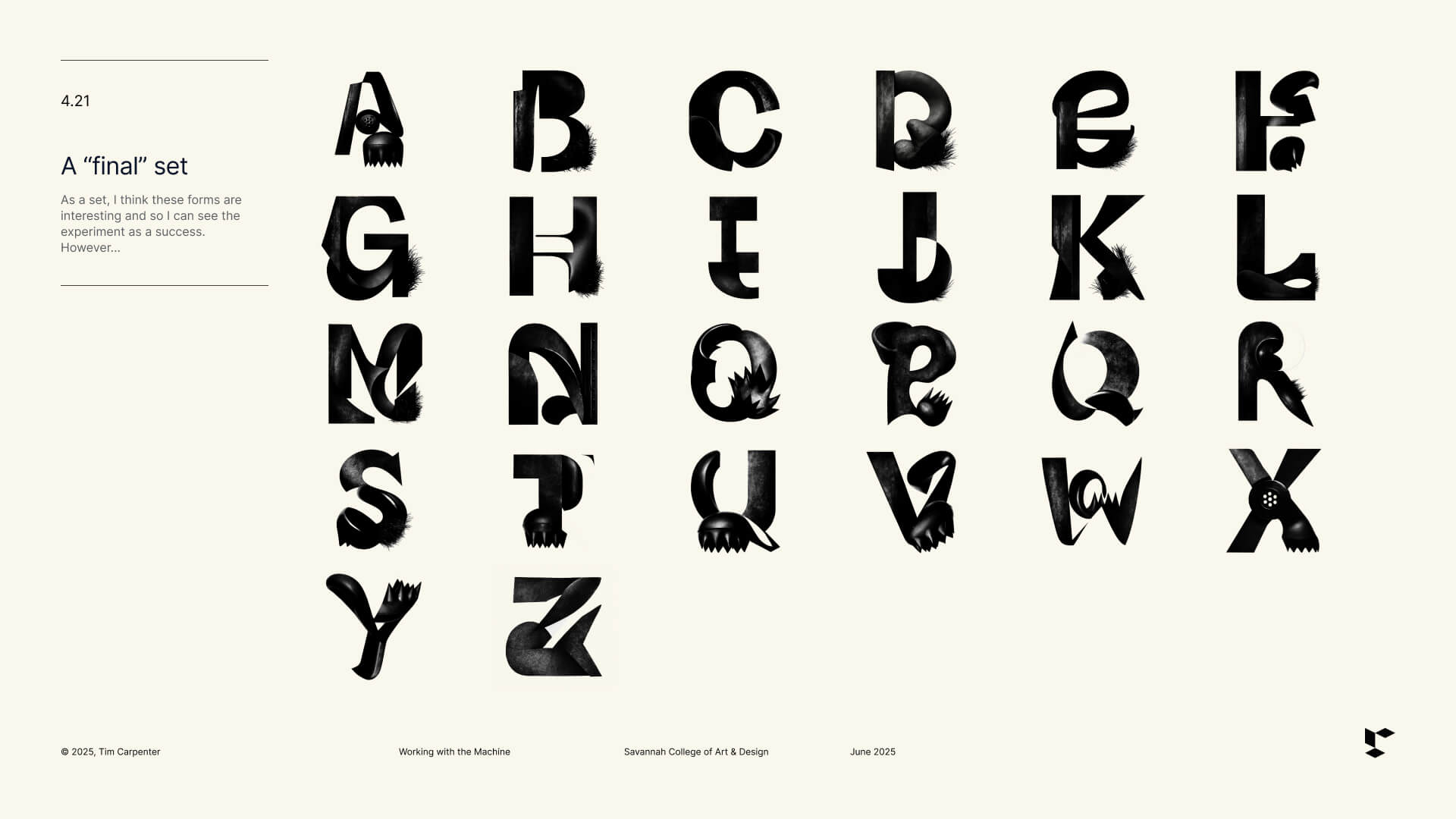

Much of my work for this lecture grew out of earlier analog experiments, such as Kindness Shines Brightest from Within and Exploring the Poetic Gap. To prepare for the talk, I wondered whether AI could collage “as well as I could.” To test the idea, I showed ChatGPT a typographic form I had assembled (the letter ‘a’), which gave me a sense of how it interpreted instructions and where chance might enter the process. I also wanted to create my own source material rather than gather images from the web. I collected small black objects from around our apartment (bowls, silicone containers, phone parts) and photographed them against a plain white background. These became the raw material the AI could collage, distort, or lightly reinterpret, though I often had to remind it not to stray too far from the original elements. I liked that the algorithm wasn’t inventing forms out of nowhere or pulling from other artists’ work, which is a legitimate concern for many of us, but was instead recombining fragments of my everyday life.

When possible, I try to oscillate between the analog and digital worlds, pushing and pulling on the strengths and limitations of each. It’s something Martin Venezky taught me at CCA, and it continues to shape my personal work. I rarely get to pursue these ideas in my everyday “real job”… but I digress.

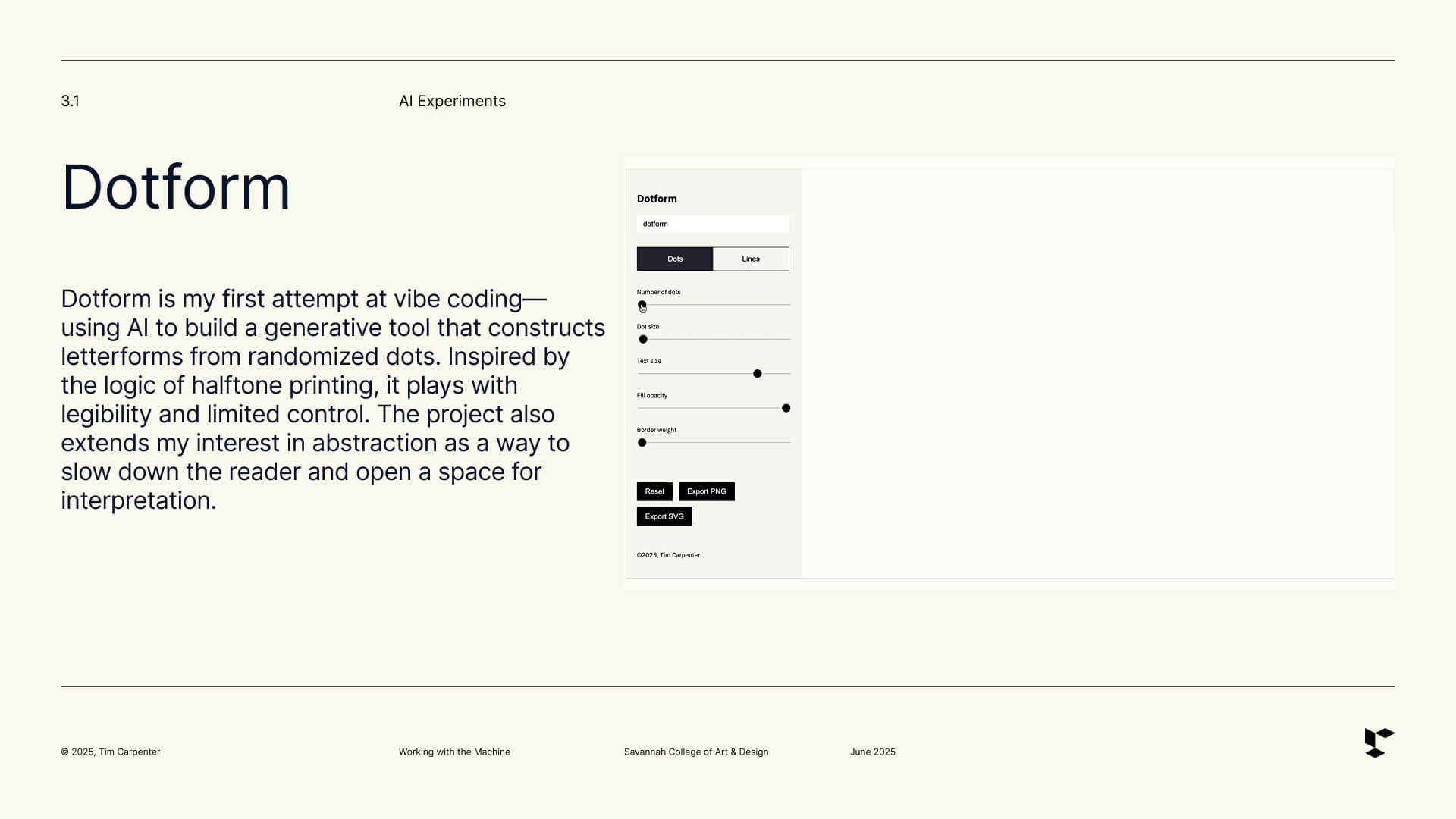

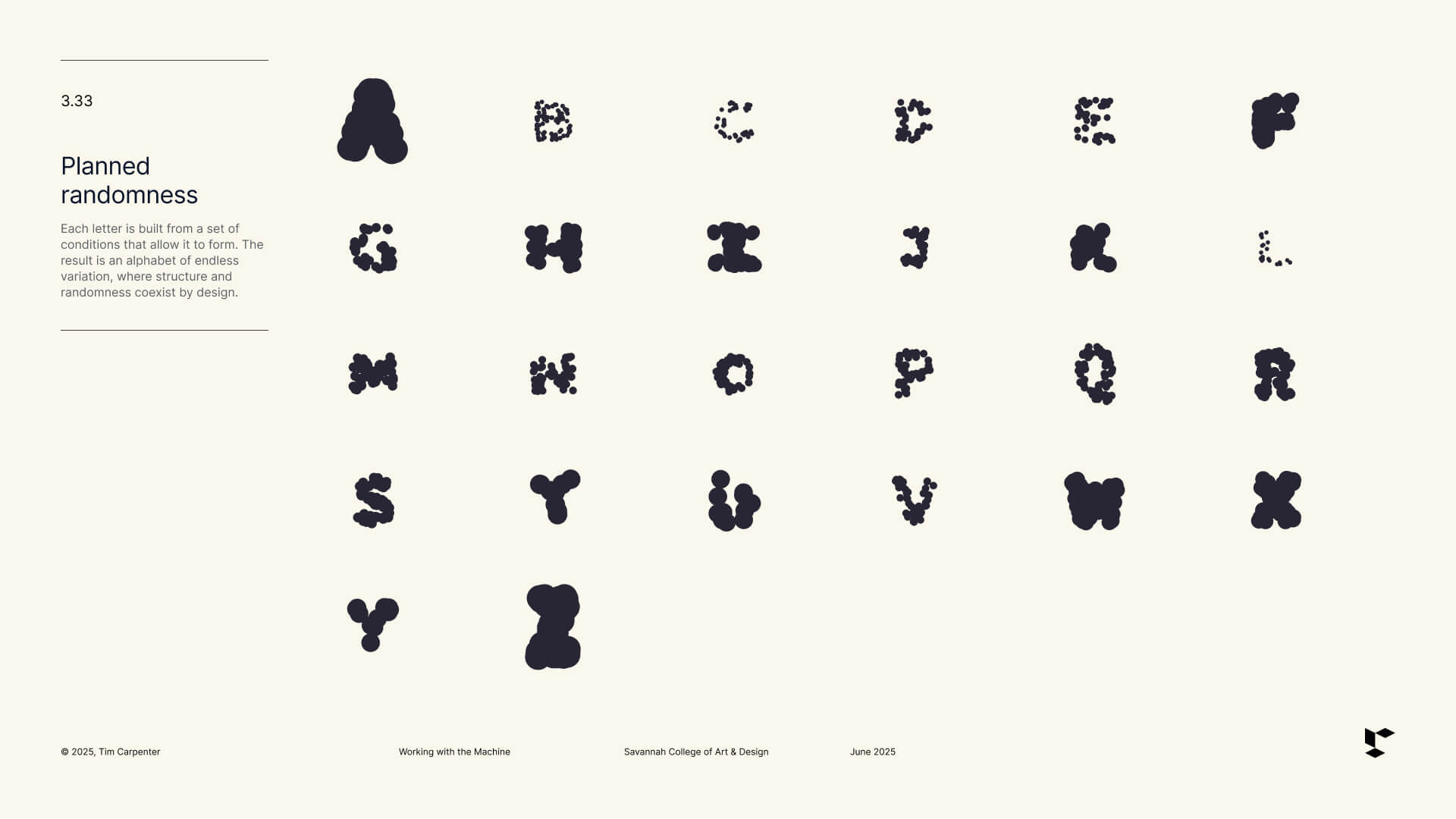

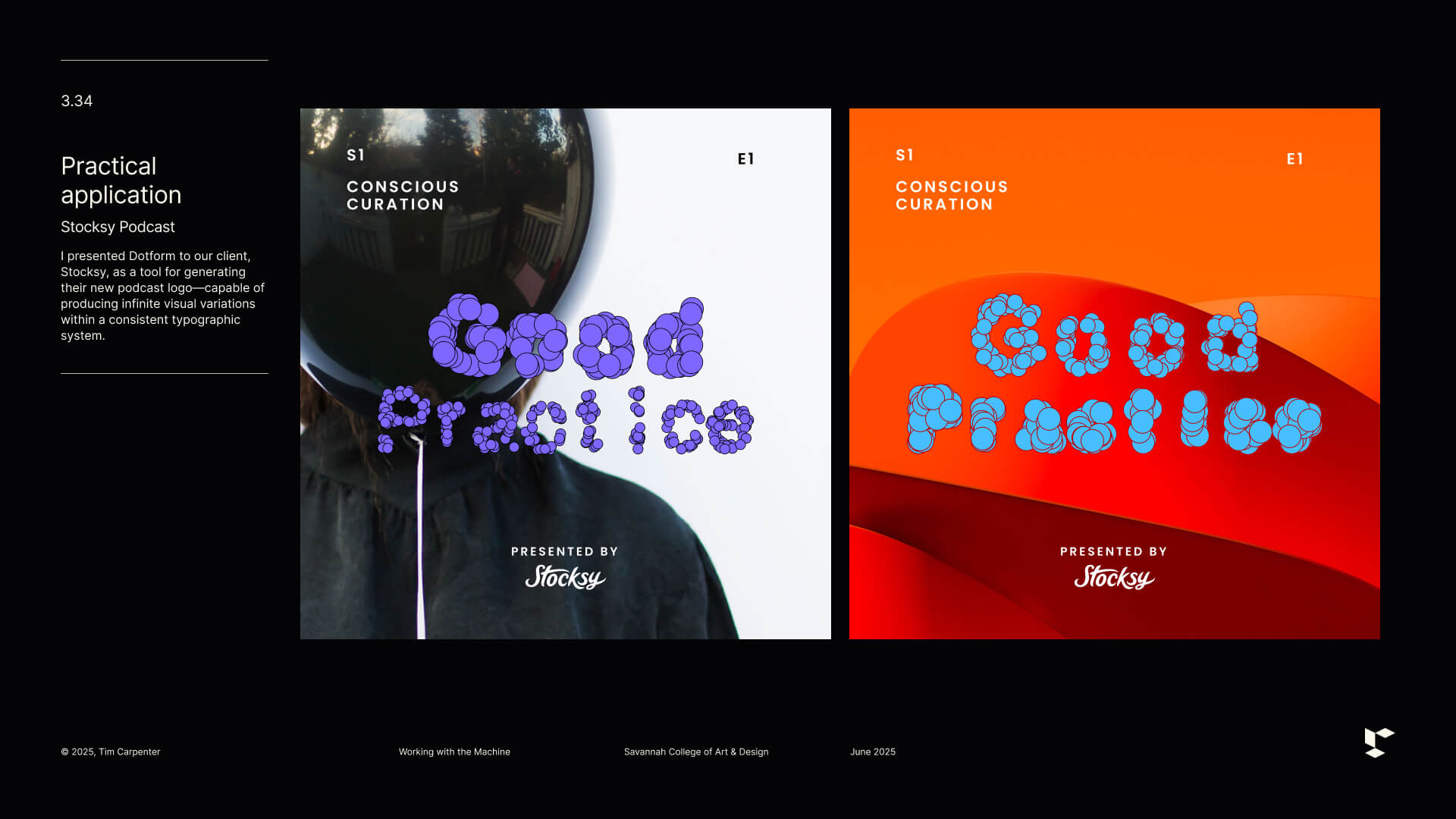

For the lecture, I shared two recent experiments: DotForm and Forma Obscura. Each began with an “I wonder if…” and each continued the analog–digital back-and-forth that runs through my work. I’ll write about both in separate posts, but for the lecture they served as simple demonstrations of how small conceptual prompts can unfold into larger projects. DotForm began when I asked ChatGPT to help me build a tool that places dots along letterforms, with built-in controls for randomness and legibility. Forma Obscura explored AI’s ability to build letterforms from materials I supplied, essentially creating a custom tool for exploring form. In both cases, the machine acted as a purpose-built partner for introducing unpredictability into my work.

The lecture itself, I was told, went well — and like many of the talks I’ve given over the years, I was left feeling inspired to continue my self-initiated inquiries. From the students’ questions and post-lecture comments, there does seem to be a growing desire to understand how to work with AI without losing one’s soul, to borrow a phrase from Adrian Shaughnessy. Here in San Francisco, it’s all anyone seems to talk about. Beyond the feverish clamor to rebrand every company as an AI company and make as much money as possible, there is, thankfully, a real concern among some about the direction the industry is heading and whether we should be using these tools at all. That tension — between curiosity and caution, between possibility and unease — has me questioning how to move forward. AI is capable of amazing things, but do we fully understand the cost?

Because alongside the creative satisfaction, there are larger, more uncomfortable questions I continue to grapple with. Big tech’s influence on society and politics. The unchecked rise of monopolies. Data harvested without our consent. The accelerating drift toward what some call technofeudalism, where a handful of companies rent us everything — from music to data protection — for monthly fees that quietly drain us. All of it traded for faster code, instant images, and the convenience of conversation with a machine. What happened to the early promises of AI: curing diseases, reducing inequality, addressing the climate crisis? Instead, we’ve ended up with a growing oligarchy, pervasive surveillance systems, and a creeping suspicion that this might all be a bubble.

So I find myself in a complicated place. Do I keep using these tools? Honestly, I’m not sure. Do any of us have a choice anymore?

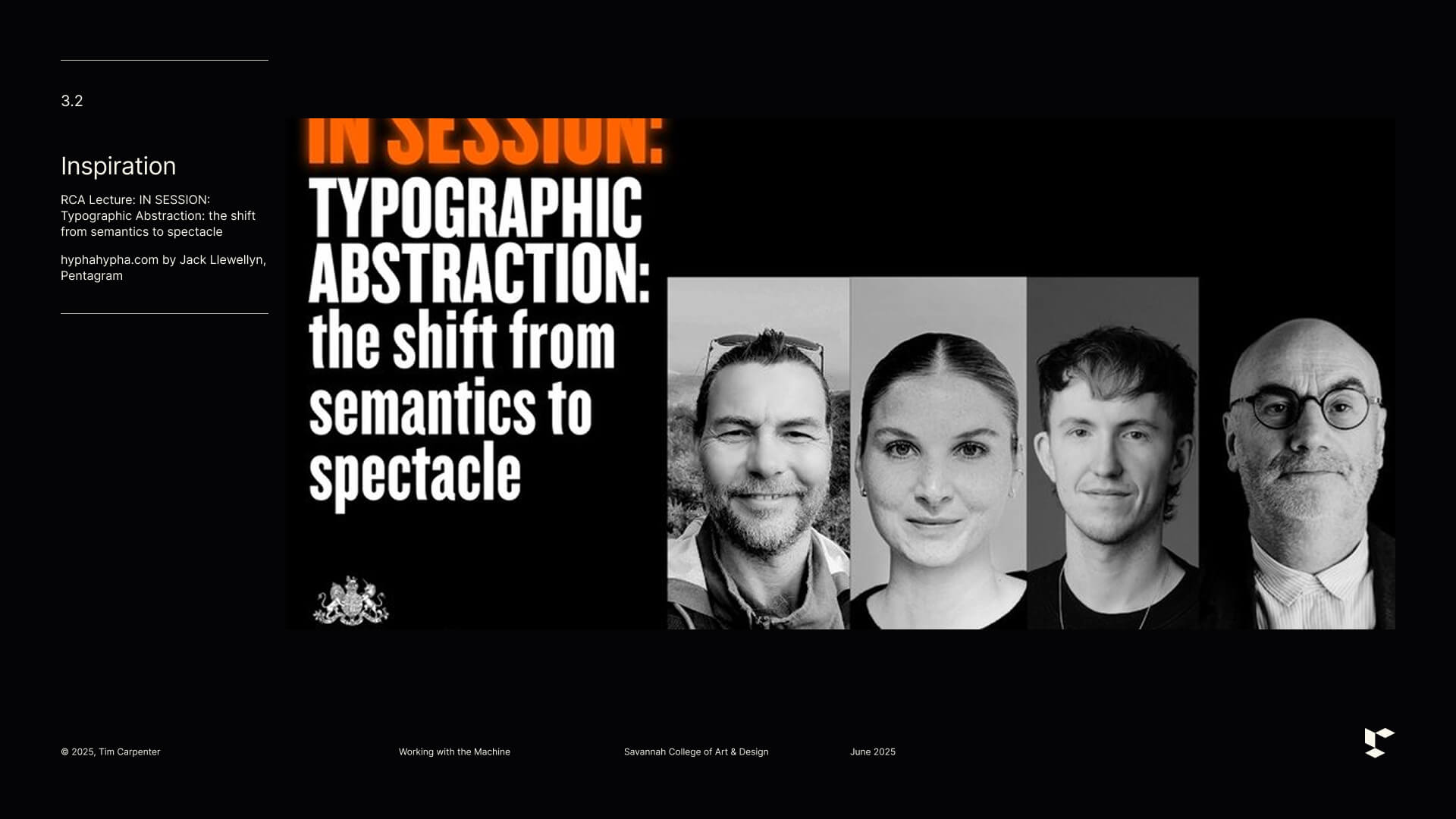

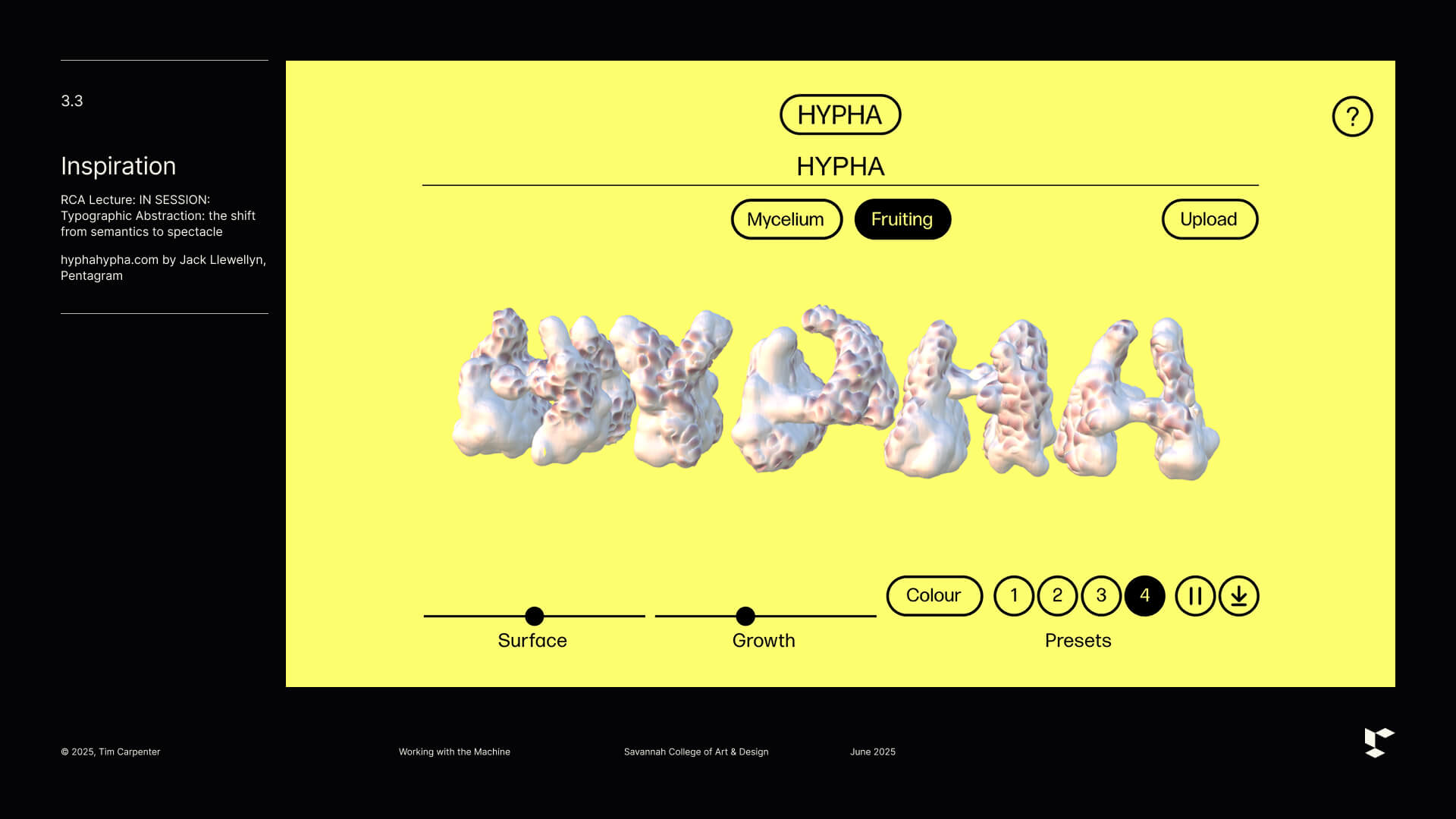

Below are a few images from the presentation, including some of the experiments I discussed.